History of WWW

- The Web/WWW began in 1989 at CERN(The European Organization for Nuclear Research; the largest particle physics laboratory in the world; The name CERN is derived from the acronym for the French “Conseil European pour la Recherche Nucléaire”; located in Geneva, Switzerland; Established on 29 September 1954, 23 Members country, (not India but India as observer country) having a vast community of users, CERN has several accelerators at which large teams of comprising over 12200 scientists of 110 nationalities from institutes in more than 70 countries; here scientists, engineers, IT specialists, human resources specialists, accountants, writers, technicians and many other kinds of people working together to break barriers to achieve the seemingly impossible).

- The Web grew out of the need to have these large teams of internationally dispersed researchers collaborate using a constantly changing collection of reports, blueprints, drawings, photos, and other documents.

- The initial proposal for a web of linked documents came from CERN, a British physicist Tim Berners-Lee in March 1989. The first (text-based) prototype was operational 18 months later. In December 1991, a public demonstration was given at the Hypertext 91 conference in San Antonio, Texas. This demonstration and its attendant publicity caught the attention of other researchers, which led Marc Andreessen at the University of Illinois to start developing the first graphical browser, Mosaic. It was released in February 1993. Mosaic was so popular that a year later, Andreessen left to form a company, Netscape Communications Corp., whose goal was to develop clients, servers, and other Web software.

- In 1994, CERN and M.I.T. signed an agreement setting up the World Wide Web Consortium (sometimes abbreviated as W3C), an organization devoted to further developing the Web, standardizing protocols, and encouraging interoperability between sites, Berners-Lee became the director. Since then, several hundred universities and companies have joined the consortium.

- A NeXT Computer was used by Berners-Lee as the world’s first web server and also to write the first web browser, WorldWideWeb, in 1990.

- On 30 April 1993, CERN announced that the World Wide Web would be free to anyone, with no fees due.

Introduction of Web

- The Internet came first then WWW, i.e. ARPANET adopted TCP/IP on January 1, 1983, and from there researchers began to assemble the “network of networks” that became finally the modern Internet. The online world then took on a more recognizable form in 1990, when computer scientist Tim Berners-Lee invented the World Wide Web.

- Emails were invented before the World Wide Web. The inventor of electronic mail Raymond Tomlinson sent the first email to himself in 1971 via a computer network called ARPANET at that time, 18 years before the World Wide Web was invented.

Definition of Web

- The World Wide Web is an architectural framework for accessing linked web documents in the form of web pages spread out over millions of machines/web servers all over the world, distributed separately, and typically connected by the Internet.

- The World Wide Web is a digital information space where public web documents and other related web resources are stored & interconnected in an organized way and identified by URLs, may interlinked by hypertext links, and can be accessed via the Internet mostly by the client.

- WWW is a hypermedia-based software technology that allows the consolidation of hypertext, graphics, audio, video, and multimedia to provide information on almost every topic/subject/area.

- WWW is a collection of text documents and other resources, linked by hyperlinks and URLs, usually accessed by web browsers, from web servers.

Features of WWW

- The terms Internet and World Wide Web are often considered similar. However, they are not the same. The Internet is a global system of interconnected computer networks whereas the World Wide Web is one of the services transferred over these networks.

- Many hostnames used for the World Wide Web begin with www because of the long-standing practice of naming Internet hosts according to the services they provide.

- The hostname for a web server is often www, similar to ftp for an FTP server, and news or nntp for a USENET news server.

- The use of www is not required by any technical or policy standard and many websites do not use it.

- When a user submits an incomplete domain name to a web browser in its address bar input field, some web browsers automatically try adding the prefix “www” to the beginning of it and possibly “.com”, “.org” and “.net” at the end, depending on what might be missing.

- WWW is a search tool that helps us to find and retrieve information in the form of the web.

- WWW supports several TCP/IP services.

- WWW Provides a single interface to find many sources.

- HTTP is the protocol used to transmit all data present on the World Wide Web(WWW).

- HTML is the standard language of WWW along with CSS and JavaScript.

Architecture

- The Web consists of a vast, worldwide collection of documents or Web pages, often just called pages for short. Each page may contain one or more links to other pages anywhere in the world. Users can follow/open a link by simply clicking on it. This process can be repeated indefinitely to reach that page.

- Working Mechanisms:

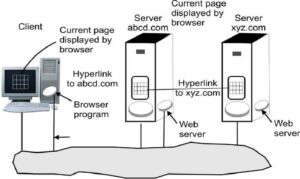

- The basic working model of the Web is shown in the figure. Here the browser is displaying a Web page on the client machine. When the user clicks on a line of text/hypertext i.e. linked to a page on the say abcd.com server, the browser follows the hyperlink by sending a message to the said abcd.com server asking it for the page. When the page arrives, it is displayed on the browser of the client machine. If this page contains a hyperlink to a page(called an embedded hyperlink) on the saying xyz.com server that is clicked on, the browser then sends a request to that machine for the page, and so on indefinitely.

-

- Typically, Web Pages are named or identified using URLs (Uniform Resource Locators). A typical URL, for example, is http://www.abcd.com/products.html. A URL has three parts: the name of the protocol (http), the DNS name of the machine where the page is located (say www.abcd.com), and the name of the file containing the page (say products.html).

- Viewing a web page on the World Wide Web normally begins either by typing the URL of the page into a web browser or by following a hyperlink to that page or resource. The web browser then initiates a series of background communication messages to fetch and display the requested page. When a user clicks on a hyperlink, the browser carries out a series of steps to fetch the page pointed to.

- The steps that occur when the link/hyperlink is selected/executed are:-

Step 3: DNS replies with a specific destination Server IP address say 156.106.192.32.

Step 4: The browser makes a TCP connection to port 80 on 156.106.192.32.

Step 5: It then sends over a request asking for file products.html.

Step 6: The http://www.abcd.com server sends the file products.html.

Step 7: The browser displays all the text in products.html.

Step 8: The browser fetches and displays all images in this file.

-

- The whole working mechanism of WWW can be understood in two forms:-

At Client Side

-

-

- When a server returns a page as a result of a web browser request, it also returns some additional information about the page. This information includes the MIME type of the page. Pages of type text/html are just displayed directly, as are pages in a few other built-in types. If the MIME type is not one of the built-in ones, the browser consults its table of MIME types to tell it how to display the page.

- There are two techniques used in displaying the web page by the browser: plug-ins and helper applications.

- A plug-in is a code module that the browser fetches from a special directory on the disk and installs as an extension to itself, because plug-ins run inside the browser, they have access to the current page and can modify its appearance. After the plug-in has done its job (usually after the user has moved to a different Web page), the plug-in is removed from the browser’s memory. During the use of plug-ins, each browser has a set of procedures that all plug-ins must implement so the browser can call the plug-in. For example, there is typically a procedure the browser’s base code calls to supply the plug-in with data to display. This set of procedures is the plug-in’s interface and is browser-specific.

-

-

-

-

- The other way to extend a browser is to use a helper application. This is a complete program, running as a separate process. Since the helper is a separate program, it offers no interface to the browser and makes no use of browser services. Instead, it usually just accepts the name of a scratch file where the content file has been stored, opens the file, and displays the contents. Typically, helpers are large programs that exist independently of the browser, such as Adobe’s Acrobat Reader for displaying PDF files or Microsoft Word. Some programs (such as Acrobat) have a plug-in that invokes the helper itself. Many helper applications use the MIME-type application. A considerable number of subtypes/file extensions have been defined, for example, application/pdf for PDF files and application/MS word for Word files, and so on. In this way, a URL can point directly to a PDF or Word file, and when the user clicks on it, Acrobat or Word is automatically started and handed the name of a scratch file containing the content to be displayed. Helper applications are not restricted to using the application MIME type. Adobe Photoshop uses image/x-photoshop and Real One Player is capable of handling audio/mp3 files.

-

-

Consequently, browsers can be configured to handle a virtually unlimited number of document types with no changes to the browser. Modern Web servers are often configured with hundreds of type/subtype combinations and new ones are often added Application Layer every time a new program is installed.

-

-

- Browsers can also open local files on the client machines, rather than fetching them from remote Web servers. Since local files do not have MIME types, the browser needs some way to determine which plug-in or helper to use for types other than its built-in types such as text/html and image/jpeg. To handle local files, helpers can be associated with a file extension as well as with a MIME type. Some browsers use the MIME type, the file extension, and even information taken from the file itself to guess the MIME type.

- In particular, Internet Explorer relies more heavily on the file extension than on the MIME type when it can.

- All a malicious Web site has to do is produce a Web page with pictures of, say, movie stars or sports heroes, all of which are linked to a virus. A single click on a picture then causes an unknown and potentially hostile executable program to be fetched and run on the user’s machine. To prevent unwanted guests like this, Internet Explorer can be configured to be selective about running unknown programs automatically, but not all users understand how to manage the configuration.

-

At Server Side

-

- As we saw on the client side, when the user types a URL in the address bar or clicks on a line of hypertext, the browser parses the URL and interprets the part between http:// and the next slash as a DNS name to look up. Armed with the IP address of the server, the browser establishes a TCP connection to port 80 on that server. Then it sends over a command containing the rest of the URL, which is the name of a file on that web server. The server then returns the file for the browser to display.

- The steps that the server performs after client request in its main loop are as follows:

1. Accept a TCP connection from a client (a browser).

2. Get the name of the file requested.

3. Get the file (from the server disk).

4. Return the file to the requested client.

5. Release the TCP connection. - Modern Web servers have some more advanced features than the above steps, but in essence, this is what a Web server does. A problem with this design is that every request requires disk access to get the file. The result is that the Web server cannot serve more requests per second than it can make disk accesses. One obvious improvement (used by all Web servers) is to maintain a cache in memory of the most recently used files. Before going to disk once again to get a file, the server checks the cache. If the file is there, it can be served directly from memory, thus eliminating disk access. Although effective caching requires a large amount of main memory and some extra processing time to check the cache and manage its contents, results from the savings in time are nearly always worth the overhead and expense.

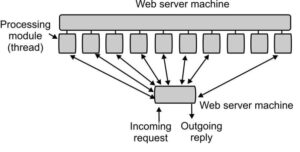

- The next step for building a faster server is to make the server multi-threaded.

- In one design, the server consists of a front-end module that accepts all incoming requests and n-processing modules, as shown in the above image. The n + 1 threads all belong to the same process so the processing modules all have access to the cache within the process’ address space. When a request comes in, the front end accepts it and builds a short record describing it. It then hands the record to one of the processing modules.

- In another possible design, the front end is eliminated and each processing module tries to acquire its own requests, but a locking protocol is then required to prevent conflicts.

A multithreaded Web server with a front end and processing modules first checks the cache to see if the file needed is there. If so, it updates the record to include a pointer to the file in the record. If it is not there, the processing module starts a disk operation to read it into the cache (possibly discarding some other cached files to make room for it. When the file comes in from the disk, it is put in the cache and also sent back to the client. The advantage of this scheme is that while one or more processing modules are blocked waiting for a disk operation to complete (and thus consuming no CPU time), other modules can be actively working on other requests. Of course, to get any real improvement over the single-threaded model, it is necessary to have multiple disks, so Application Layer more than one disk can be busy at the same time. With n processing modules and n disks, the throughput can be as much as n times higher than with a single-threaded server and one disk.

- In theory, a single-threaded server and n disks could also gain a factor of n, but the code and administration are far more complicated since normal blocking READ system calls cannot be used to access the disk. With a multithreaded server, they can be used since READ blocks only the thread that made the call, not the entire process.

- Modern Web servers do more than just accept file names and return files. In fact, the actual processing of each request can get quite complicated. For this reason, in many servers, each processing module performs a series of steps. The front end passes each incoming request to the first available module, which then carries it out using some subset of the following steps, depending on which ones are needed for that particular request-

Step 1 : Resolve the name of the Web page requested

This step is needed because the incoming request may not contain the actual name of the file as a literal string. For example, consider the URL http://www.cs.vu.nl, which has an empty file name. It has to be expanded to some default file name. Also, modern browsers can specify the user’s default language (e.g., Italian or English), which makes it possible for the server to select a Web page in that language, if available. In general, name expansion is not quite so trivial as it might at first appear, due to a variety of conventions about file naming.

Step 2 : Authenticate the client

This step consists of verifying the client’s identity. This step is needed for pages that are not available to the general public.

Step 3 : Perform access control on the client

This step checks to see if there are restrictions on whether the request may be satisfied given the client’s identity and location.

Step 4 : Perform access control on the Web page

This step checks to see if there are any access restrictions associated with the page itself. If a certain file (e.g., .htaccess) is present in the directory where the desired page is located, it may restrict access to the file to particular domains, for example, only users from inside the company.

Steps 5 and 6 : Check the cache and Fetch the requested page from the disk

The step 5 involves getting the page from the cache file and if not found then Step 6 needs to be able to handle multiple disk reads at the same time for that file.

Step 7 : Determine the MIME type to include in the response

This step is about determining the MIME type from the file extension, the first few words of the file, a configuration file, and possibly other sources.

Step 8 : Take care of miscellaneous odds and ends

This step is for a variety of miscellaneous tasks, such as building a user profile or gathering certain statistics.

Step 9 : Return the reply to the client

This step is where the result is sent back and

Step 10 : Make an entry in the server log

-

-

- This step makes an entry in the system log for administrative purposes. Such logs can later be mined for valuable information about user behavior, such as the order in which people access the pages.

- If too many requests come in each second, the CPU will not be able to handle the processing load, no matter how many disks are used in parallel. The solution is to add more nodes (computers), possibly with replicated disks to avoid having the disks become the next bottleneck. A front end still accepts incoming requests but sprays them over multiple CPUs rather than multiple threads to reduce the load on each computer. The individual machines may themselves be multithreaded and pipelined as before.

- One problem with server farms is that there is no longer a shared cache because each processing node has its own memory unless an expensive shared-memory multiprocessor is used. One way to counter this performance loss is to have a front end keep track of where it sends each request and send subsequent requests for the same page to the same node. Doing this makes each node a specialist in certain pages so that cache space is not wasted by having every file in every cache.

- Another problem with server farms is that the client’s TCP connection terminates at the front end, so the reply must go through the front end. Sometimes a trick, called TCP handoff, is used to get around this problem. With this trick, the TCP endpoint is passed to the processing node so it can reply directly to the client. This handoff is done in a way that is transparent to the client.

-

Versions of Web

There are the following versions of Web/WWW –

-

Web 1.0

-

Web 1.0 refers to the early days of the World Wide Web, typically from the early 1990s to the late 2000s.

-

During the Web 1.0 period, the internet was primarily used for browsing and retrieving static information.

-

In this period, websites were predominantly static, consisting of basic HTML pages with text, images, and hyperlinks. This time the web page had little to no interactivity or dynamic content.

-

The primary focus of Web 1.0 was on the consumption of information rather than its creation or sharing. Most content was created and controlled by website owners.

-

Social media platforms as we know them today did not exist during the Web 1.0 era. Communication and interaction between users were limited to email, forums, and chat rooms.

-

Search engines like Yahoo!, AltaVista, and later Google emerged during this period to help users find information on the web.

-

In this period, Internet access was primarily through dial-up connections, which were slow and often limited by connection speeds and phone line availability.

-

In Web 1.0, users could only consume content passively. Interaction with websites was mostly limited to clicking hyperlinks to navigate between pages.

-

Thus, finally, we can say that Web 1.0 laid the foundation for the modern internet but lacked the interactivity, user-generated dynamic content, and social features that characterize the later stages of web development.

-

-

Web 2.0

-

Web 2.0 refers to the second stage in the evolution of the World Wide Web.

-

Web 2.0 is characterized by a shift towards dynamic and interactive user-generated content and collaboration.

-

Web 2.0 emerged in the mid-2000s and continues to shape the internet landscape today.

-

Unlike Web 1.0, where content was primarily created and controlled by website owners, Web 2.0 allows users to generate and contribute content. This includes blogs, social media posts, wikis, and multimedia-sharing platforms like YouTube.

-

Social networking platforms such as Facebook, Twitter, LinkedIn, WhatsApp, Telegram, and Instagram became prominent in the Web 2.0 era. These platforms facilitate user interaction, communication, and content sharing on a large scale worldwide.

- Web 2.0 encourages collaboration and participation among users. Wikis allow multiple users to edit and contribute to content collaboratively, while platforms like Google Docs enable real-time collaborative document editing.

- Websites in the Web 2.0 era offer more interactivity and dynamic content. Features such as AJAX (Asynchronous JavaScript, XML, and JSON) enable smoother, more responsive web interfaces, leading to a richer user experience.

- Web 2.0 brought about the rise of web-based applications that mimic the functionality of traditional desktop software. These applications, often referred to as “web apps,” run entirely in a web browser and offer services ranging from email and document editing to project management and multimedia editing.

- Web 2.0 platforms often employ algorithms to personalize content based on user preferences and behavior. This personalization enhances user experience by providing relevant and tailored content.

- Thus, overall, Web 2.0 revolutionized the way people interact with the Internet, emphasizing user participation, collaboration, and dynamic content creation. It laid the groundwork for many of the social, technological, and economic developments that have shaped the modern digital landscape.

-

-

Web 3.0

-

Web 3.0, often referred to as the “Semantic Web” or “Decentralized Web”

-

Web 3.0 represents the next phase in the evolution of the World Wide Web.

-

Web 3.0 generally refers to a vision of the internet where information is not only accessible by humans but also easily understood and processed by computers.

-

This Web aims to make web content more meaningful by adding metadata, annotations, and structured data to web pages. This metadata helps computers understand the context and meaning of information, enabling more sophisticated searches, data integration, and automated reasoning.

- This Web shows the Linked Data concept which is a method of publishing structured data on the web using standard formats and protocols such as RDF (Resource Description Framework) and SPARQL (SPARQL Protocol and RDF Query Language). By linking related data across different sources, Linked Data facilitates data integration and interoperability, allowing computers to navigate and analyze vast amounts of information.

- AI and machine learning technologies play a crucial role in Web 3.0 by enabling intelligent data processing, natural language understanding, and personalized recommendations. These technologies power virtual assistants, chatbots, and recommendation systems that enhance user experience and automate tasks on the web.

- Decentralization is a core principle of Web 3.0, aiming to reduce reliance on centralized authorities and intermediaries. Blockchain technology, in particular, is instrumental in decentralizing various aspects of the web, including digital currencies (e.g., Bitcoin), decentralized finance (DeFi), and decentralized applications (dApps).

- Web 3.0 promotes interoperability between different systems, platforms, and data sources, allowing seamless data exchange and collaboration across the web. Standards such as W3C’s Web Ontology Language (OWL) and JSON-LD (JSON for Linked Data) facilitate interoperability by defining common data formats and vocabularies.

- Web 3.0 emphasizes user privacy and security by design, using encryption, decentralized identity systems, and cryptographic techniques to protect user data and ensure secure communication and transactions on the web.

- Thus, Web 3.0 represents a paradigm shift towards a more intelligent, decentralized, and interconnected web, where data is not only accessible but also meaningful and actionable for both humans and machines. While many of the technologies and concepts associated with Web 3.0 are still in development, they have the potential to reshape the internet and unlock new possibilities for innovation, collaboration, and digital empowerment.

-

-

Web 4.0

- In January 2022, the term “Web 4.0” came into existence which is not widely recognized or formally defined in the same way as Web 1.0, 2.0, or 3.0 but considered.

- The concept of Web 4.0 is based on technological advancements and trends, it might involve further advancements in areas such as artificial intelligence, augmented reality, virtual reality, the Internet of Things (IoT), and possibly new paradigms such as quantum computing and advanced robotics.

- Web 4.0 might involve Intelligent Internet of Things (IoT) devices equipped with advanced AI algorithms that enable them to make autonomous decisions, communicate more intelligently, and adapt to changing environments.

- Web 4.0 could integrate Augmented Reality (AR) and Virtual Reality (VR) technologies more seamlessly into the online experience, blurring the lines between the physical and digital worlds. This could lead to immersive online shopping experiences, virtual meetings, and educational simulations.

- Web 4.0 might focus on even more advanced personalization techniques, using AI to anticipate users’ needs and preferences on a hyper-individualized level.

- As quantum computing matures, there might be efforts to develop a quantum internet, which would enable secure communication and computing protocols based on quantum principles.

- With increasing concerns about AI bias, privacy, and data security, Web 4.0 could prioritize ethical considerations in AI development and implement stricter regulations for data governance.

- Web 4.0 might introduce new modes of advanced human-computer interaction, such as brain-computer interfaces or gesture-based controls, enabling more natural and intuitive ways of interacting with digital systems.

- Building upon the decentralized principles of Web 3.0, Web 4.0 might further explore the potential of blockchain and distributed ledger technologies for secure, transparent, and tamper-proof data management and transactions.

- Thus, the term “Web 4.0” is speculative and not yet widely accepted or defined within the technology community. The evolution of the web is continuous and nonlinear, with advancements occurring across various domains and paradigms.

![]()

0 Comments