Introduction to Multi-threading in Java

- Multi-threading in Java is also sometimes called Thread-based Multitasking.

Definition

- Multi-threading is the concurrent execution of two or more threads of the same process, so that the process makes better use of the CPU and completes its work more efficiently.

Features/Characteristics of Thread

- A Java thread is a lightweight sub-process or smallest part of a process.

- Thread is the smallest executable unit of a process.

- A thread is a single sequence of execution that can run independently in an application.

- A thread has its own path of execution within a process.

- A process can have one/multiple threads.

- In a single-processor system, only a single thread of execution occurs at a given instant of time.

- Java was one of the first mainstream languages to provide built-in support for multi-threading.

- In a Java program, multiple threads can execute concurrently, which can increase CPU utilization and overall throughput.

- Every thread has its lifetime.

- All threads of the same process share a common memory space, i.e., the threads live inside the memory of their process. Because they share this memory, communication between threads (inter-thread communication) is fast.

- Context Switching:

- On a single core, the CPU quickly switches back and forth between several threads (called context switching) to create the illusion that the threads are executing at the same time.

- Context switching between threads of the same process is less expensive than switching between processes, because the threads share the same address space.

- On a multi-core system, threads can also run truly in parallel, each on its own core.

- Threads do not allocate separate memory areas to run; apart from a private call stack, they use the shared memory of their process, which saves memory.

- Each thread has its own flow of control that is independent of all other threads. Each such independently executed instruction sequence is a thread.

- Threads are independent in execution because each has a separate path of execution, i.e., if an uncaught exception occurs in one thread, only that thread terminates and the other threads continue to run.

- An application can run as multiple processes (instances). Each of these processes may have a single thread or multiple threads.
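
The independence of threads described above can be sketched as follows. This is a minimal illustration, not a definitive pattern: the class name `ThreadIsolationDemo` and the thread names are hypothetical, and the uncaught-exception handler is installed only to keep the output short.

```java
import java.util.concurrent.atomic.AtomicBoolean;

class ThreadIsolationDemo {
    // Returns true if the healthy worker finished even though its sibling failed.
    static boolean healthyWorkerSurvives() throws InterruptedException {
        AtomicBoolean finished = new AtomicBoolean(false);

        Thread failing = new Thread(() -> {
            throw new IllegalStateException("simulated failure");
        }, "failing-worker");
        // Report the uncaught exception ourselves instead of printing a stack trace.
        failing.setUncaughtExceptionHandler((t, e) ->
                System.out.println(t.getName() + " died: " + e.getMessage()));

        Thread healthy = new Thread(() -> finished.set(true), "healthy-worker");

        failing.start();
        healthy.start();
        failing.join();
        healthy.join();
        return finished.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("healthy worker finished: " + healthyWorkerSurvives());
    }
}
```

Only the failing thread terminates; the second thread and the caller keep running.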

Advantages

- It reduces the maintenance cost of the application.

- It supports maximum resource utilization of the system on which the application(s) is running.

- Threads support concurrency in Java, i.e., several services can run at the same time within a single process.

- Multi-threaded programming is very useful in network as well as Internet application development.

- Multi-threading requires less processing overhead than running separate processes, because concurrent threads can share common resources more efficiently.

- Multi-threading enables programmers to write very efficient programs that make maximum use of the CPU.

- A multi-threaded web server is one of the best examples of multi-threaded programming: it can efficiently handle multiple browser requests at a time by processing each request on its own thread.

- An individual thread can be controlled without affecting the others, i.e., we can pause one thread without stopping the other threads of the program, and a paused thread can resume later.

Disadvantages

- Debugging and testing a multi-threaded program is complex.

- The output of a multi-threaded program (especially intermediate output) is sometimes unpredictable.

- Context switching between threads adds processing overhead.

- Multi-threading increases the potential for deadlock.

- Multi-threaded programs are more difficult to write.

- Shared resources (objects, data) require synchronization.
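
The need to synchronize shared data can be illustrated with a small sketch. The class `SharedCounterDemo` and its method names are hypothetical; the `synchronized` keyword is the standard Java mechanism used here.

```java
class SharedCounterDemo {
    private int count = 0;

    // synchronized makes the read-modify-write step atomic with respect to other threads.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    // Two threads increment the same counter; returns the final value.
    static int countWithTwoThreads(int incrementsPerThread) throws InterruptedException {
        SharedCounterDemo counter = new SharedCounterDemo();
        Runnable task = () -> {
            for (int i = 0; i < incrementsPerThread; i++) {
                counter.increment();
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countWithTwoThreads(10_000)); // prints 20000
    }
}
```

Without `synchronized` on `increment()`, the two threads could interleave inside `count++` and the final value could be lower than expected.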

Types of Thread

- When a Java program starts, one thread begins running immediately; it is called the main thread of the program. From the main thread, we can create as many child threads as the program needs. These child threads may be user threads or daemon threads. The main thread is created automatically by the JVM when the program starts, and, like every Java thread, it is controlled through an object of the Thread class.

- By using the static method currentThread(), we can get a reference to the thread in which this method is called. Once we have a reference to the main thread, we can control it just like any other thread.

- A thread is said to be dead when its processing has completed. Once a thread reaches the dead state, it cannot be restarted.

- There are two types of thread: user threads and daemon threads. We can turn a user thread into a daemon thread explicitly (when needed) by calling the setDaemon(true) method on it before the thread is started.
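
As a rough sketch of the points above, the following example obtains a reference to the calling thread with `Thread.currentThread()` and shows that a finished thread cannot be started again. The class and method names (`MainThreadDemo`, `renameCurrentThread`, `restartIsRejected`) are made up for illustration.

```java
class MainThreadDemo {
    // Renames the calling thread and returns its previous name.
    static String renameCurrentThread(String newName) {
        Thread current = Thread.currentThread();
        String oldName = current.getName();
        current.setName(newName);
        return oldName;
    }

    // Returns true if a second start() on a finished thread is rejected.
    static boolean restartIsRejected() throws InterruptedException {
        Thread worker = new Thread(() -> { });
        worker.start();
        worker.join();        // the worker is now dead
        try {
            worker.start();   // a dead thread cannot be restarted
            return false;
        } catch (IllegalThreadStateException expected) {
            return true;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        String oldName = renameCurrentThread("renamed-main");
        System.out.println(oldName + " -> " + Thread.currentThread().getName());
        System.out.println("restart rejected: " + restartIsRejected());
    }
}
```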

User Thread

- When a thread application starts, a user thread is created first and after that, we can create many user threads or daemon threads as per need.

- User threads are threads that are created by the application or user.

- These threads perform the completion of the user’s application/jobs.

- These are normally high-priority threads.

- These threads are foreground threads.

- JVM (Java Virtual Machine) will not exit completely until all user threads finish their execution. JVM waits for these threads to finish their task.

Daemon Thread

- These are threads that are mostly created by the JVM as per requirements.

- These threads always run in the background.

- These threads mainly perform system-related jobs and indirectly help in completing the user’s job.

- These threads are used to perform specific background tasks such as garbage collection, cleaning up the application, and other housekeeping tasks.

- These threads normally have low priority.

- The JVM does not wait for daemon threads to finish; it exits as soon as all user threads have finished their execution, abandoning any daemon threads that are still running.
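
A daemon thread can be sketched like this. The helper `newBackgroundThread` and the thread name "housekeeping" are assumptions made for the example; `setDaemon` and `isDaemon` are the actual `Thread` methods.

```java
class DaemonThreadDemo {
    // Creates (but does not start) a daemon thread for the given task.
    static Thread newBackgroundThread(Runnable task) {
        Thread t = new Thread(task, "housekeeping");
        t.setDaemon(true); // must be called before start()
        return t;
    }

    public static void main(String[] args) {
        Thread daemon = newBackgroundThread(() -> {
            while (true) {
                try {
                    Thread.sleep(100); // pretend to do periodic housekeeping
                } catch (InterruptedException e) {
                    return;
                }
            }
        });
        daemon.start();
        System.out.println("isDaemon = " + daemon.isDaemon());
        // main returns here; the JVM exits even though the daemon's loop never ends.
    }
}
```

If `setDaemon(true)` were removed, the endless loop would run in a user thread and keep the JVM alive.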

Life Cycle of a Thread/Thread State/Thread Model

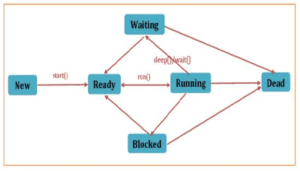

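- A thread exists as an object and has six well-defined states. At any point in time, the thread is in exactly one of these states.

- The java.lang.Thread class has a nested enum called State (Thread.State) that stores the states as constants: NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, and TERMINATED. Thread.State has no separate constant for a running thread; both the ready and the running states described below are reported as RUNNABLE. The states are: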

New State

- It is the first state of the thread life cycle.

- When we create an instance of the Thread class, the newly created thread is in the new state.

- A thread that has not yet started is in this state.

- A new thread begins its life cycle in the new state. It remains in this state until the program starts the thread.

- It is also referred to as a born thread.

- A thread in the new state does not begin executing immediately; we must first invoke the start() method to start its execution.

Ready/Runnable State

- After a newly born thread is started, the thread becomes runnable.

- As far as the JVM is concerned, a thread in this state is considered to be executing its task, even if it is still waiting for a CPU.

- In this state, the thread waits for the thread scheduler to allocate CPU time to it.

- A thread may also come back to this ready state (after it was paused for a while during processing) to resume its execution once the condition it was waiting for is fulfilled.

Running/Execution State

- It is the third state of a thread and comes after the ready state: the thread scheduler has selected the thread, and it is executing on a CPU.

- The Java thread is running inside JVM.

- Threads are born to run, and a thread is said to be running when it is executing.

- A running thread may perform several actions. A common example is when the thread performs some type of input or output operation.

Waiting/Suspended State

- A running thread can be suspended, usually temporarily, because it lacks some signal, instruction, or other condition it needs to continue.

- A suspended thread can be resumed once it receives the required signal or the missing condition is met, allowing it to pick up where it left off.

- In this state, a thread waits for another thread to perform a task.

- A suspended thread returns to the runnable state only when another thread signals the waiting thread to continue executing.

- A thread enters this state when it calls wait() or join() without a timeout.

- A thread may remain waiting indefinitely until the waiting condition is fulfilled.

- A waiting state may also be timed (TIMED_WAITING): a runnable thread enters the timed waiting state for a specified interval, for example by calling sleep(), or wait() or join() with a timeout. In this state, the thread waits for another thread to act, but only up to the specified waiting time. It transitions back to the runnable state when that interval expires or when the event it is waiting for occurs.

Blocked State

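- A Java thread is blocked when it is waiting for a resource, typically a monitor lock that it needs to enter a synchronized block or method while another thread holds that lock.

- Threads that are deadlocked on such locks remain in this state indefinitely.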

Terminated/Dead State

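- A runnable thread enters the terminated state when it completes its task normally or terminates for another reason, such as an uncaught exception.

- Forcing a thread to halt immediately from outside (Thread.stop()) is deprecated and unsafe; the usual approach is to request cancellation with interrupt() and let the thread finish on its own.

- Once a thread is terminated, it cannot be resumed.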

The life cycle of a thread can be summarized as follows:

A thread becomes ready when its start() method is called, and it enters the running state when the thread scheduler selects it and the JVM invokes its run() method. While running, the thread may be preempted in favor of other threads and return to the ready state, or it may wait for a resource, wait for another thread, or simply sleep for some time; in these cases the thread enters the waiting/blocked state. To run again from the waiting/blocked state, the thread must first re-enter the ready state. Finally, the thread ceases execution when its run() method completes and enters the dead state.
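
The state transitions summarized above can be observed with `Thread.getState()`. The sketch below is only illustrative: `ThreadStateDemo` and `observeStates` are hypothetical names, and a `CountDownLatch` is used as a convenient way to hold the worker in the waiting state.

```java
import java.util.concurrent.CountDownLatch;

class ThreadStateDemo {
    // Returns the states seen before start, while the worker waits, and after it ends.
    static Thread.State[] observeStates() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            try {
                release.await(); // the worker waits here until released
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread.State beforeStart = worker.getState();   // NEW
        worker.start();

        // Poll until the worker has actually reached the waiting state.
        Thread.State whileWaiting = worker.getState();
        while (whileWaiting != Thread.State.WAITING) {
            Thread.sleep(1);
            whileWaiting = worker.getState();
        }

        release.countDown();                            // let the worker finish
        worker.join();
        Thread.State afterEnd = worker.getState();      // TERMINATED

        return new Thread.State[] { beforeStart, whileWaiting, afterEnd };
    }

    public static void main(String[] args) throws InterruptedException {
        for (Thread.State s : observeStates()) {
            System.out.println(s);
        }
    }
}
```

Running `main` prints NEW, WAITING, and TERMINATED, one per line.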

Syntax of Thread

- The multi-threading system in Java is built upon the Thread class (which has several methods that help in managing threads) and an interface called Runnable.

- To create a new thread, we either extend the Thread class (inheritance) or implement the Runnable interface and pass the implementation to a Thread.

- Thus, a new thread can be created in two ways:

1. By extending the Thread class

2. By implementing the Runnable interface (usually the more flexible way, because the class remains free to extend another class)
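
Both ways can be sketched side by side. The names `CreateThreadDemo`, `GreeterThread`, `GreeterTask`, and the shared `LOG` list are hypothetical and exist only so the result can be inspected.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class CreateThreadDemo {
    // Thread-safe list that records which threads have run.
    static final List<String> LOG = new CopyOnWriteArrayList<>();

    // Way 1: extend Thread and override run().
    static class GreeterThread extends Thread {
        @Override
        public void run() {
            LOG.add(getName());
        }
    }

    // Way 2: implement Runnable and hand the task to a Thread.
    static class GreeterTask implements Runnable {
        @Override
        public void run() {
            LOG.add(Thread.currentThread().getName());
        }
    }

    static List<String> runBoth() throws InterruptedException {
        LOG.clear();
        Thread first = new GreeterThread();
        first.setName("by-inheritance");
        Thread second = new Thread(new GreeterTask(), "by-runnable");
        first.start();   // start() creates the new thread, which then calls run()
        second.start();
        first.join();
        second.join();
        return LOG;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runBoth()); // order of the two names may vary
    }
}
```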

Priority of Threads

- We can set different priority values for different threads as needed, but this does not guarantee that a higher-priority thread will execute before a lower-priority thread.

- The thread scheduler decides which runnable thread gets the CPU; in most JVMs this work is done by the operating system’s scheduler. Once a thread is started, its execution is controlled by the thread scheduler, and the program has no direct control over it. Once a thread pauses its execution, we cannot say when it will get a chance to run again; that depends on the thread scheduler of the system.

- Every Java thread has a priority, a positive integer value that helps the scheduler decide the order in which threads are executed. Priorities need not be unique; several threads can have the same priority.

- A thread with a higher priority is generally preferred for execution over a thread with a lower priority, subject to the caveats above.

- There are two common thread priority methods in the java.lang.Thread class: setPriority() and getPriority(). The setPriority() method sets or changes the priority value of a thread, and the getPriority() method retrieves the priority value of a thread.

- Java thread priorities lie in the range MIN_PRIORITY (1) to MAX_PRIORITY (10). The main thread starts with NORM_PRIORITY (5), and a newly created thread has, by default, the same priority as its parent, i.e., the thread that created it.

- Threads with higher priority are more important for a program/system and should be allocated processor time ahead of lower-priority threads. However, thread priorities cannot guarantee the order in which threads execute and are very much platform-dependent.

- Thread priorities are given as positive integer values that indicate how one thread should be treated relative to the others.

- Thread priority can influence when the scheduler switches from one running thread to another; the switch itself is called context switching.

- On platforms with preemptive priority scheduling, a lower-priority thread can be preempted by a higher-priority thread no matter what the lower-priority thread is doing. Java does not guarantee this behavior on every platform.
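
The priority API described above can be sketched as follows. `PriorityDemo` and its methods are hypothetical names; the example assumes the default thread group, which allows priorities up to `MAX_PRIORITY`.

```java
class PriorityDemo {
    // Returns { creator's priority, child's inherited priority, child's priority after the change }.
    static int[] priorities() {
        Thread creator = Thread.currentThread();
        Thread child = new Thread(() -> { });
        int inherited = child.getPriority();      // same as the creating thread
        child.setPriority(Thread.MAX_PRIORITY);   // a hint to the scheduler, not a guarantee
        return new int[] { creator.getPriority(), inherited, child.getPriority() };
    }

    // Returns true if a value outside 1..10 is rejected.
    static boolean rejectsOutOfRange() {
        try {
            new Thread(() -> { }).setPriority(Thread.MAX_PRIORITY + 1);
            return false;
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        int[] p = priorities();
        System.out.println("creator=" + p[0] + " inherited=" + p[1] + " changed=" + p[2]);
        System.out.println("out-of-range rejected: " + rejectsOutOfRange());
    }
}
```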

Thread Methods

There are many thread methods; some common ones used in multi-threaded applications are as follows:

- getName(): It is used for obtaining a thread’s name as a string.

- getPriority(): It is used for obtaining a thread’s priority value from 1 to 10 as an integer.

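- interrupt(): It requests that a thread stop what it is doing by setting the thread’s interrupt flag; if the thread is blocked in sleep(), wait(), or join(), it receives an InterruptedException. It does not forcibly stop the thread.

- isAlive(): It is used to check whether a thread has been started and has not yet terminated.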

- join():

- Wait for a thread to terminate.

- This method makes the calling thread wait for another thread to end, so that the caller does not continue running until that thread has finished.

- If join() is called on a Thread instance, the currently running thread will block until the Thread instance has finished executing.

- The overload join(long millis) waits at most the given number of milliseconds for the thread to die. Here a timeout of 0 means to wait forever.

- notify(): It wakes up a single thread that has called wait() on the same object. Calling notify() does not give up the lock on the object; it only tells a waiting thread that it can wake up. The lock is not given up until the notifier’s synchronized block has completed.

- notifyAll(): It wakes up all the threads that have called wait() on the same object. The awakened threads then compete for the lock; the highest-priority thread will often run first, though this is not guaranteed. Otherwise it behaves like the notify() method above.

- run(): Entry point for the thread.

- setName(): It is used for changing the name of a thread.

- sleep(): It pauses the currently running thread for a specified period in milliseconds, after which the thread becomes runnable again.

- start(): It starts a new thread of execution; the JVM then calls the thread’s run() method on that new thread. Calling run() directly does not start a new thread.

- getState(): It returns the thread’s current state as a Thread.State value.

- wait():

- Calling the wait() method forces the current thread to wait until some other thread invokes notify() or notifyAll() on the same object, i.e., it tells the calling thread to give up the lock and go to sleep until some other thread enters the same monitor and calls notify().

- The wait() method releases the lock before waiting and reacquires the lock before returning from the wait() method.

- The wait() method is tightly integrated with the synchronization lock.

- yield():

- The yield() method hints to the scheduler that the currently running thread is willing to pause so that other waiting threads of the same priority can get the CPU. The scheduler is free to ignore this hint.

- Threads with lower priority are normally not given the CPU as a result of yield().

- It temporarily pauses the currently running thread and gives the remaining waiting threads of the same priority a chance to execute.

- This method is used mainly when the currently running thread is not doing anything particularly important and other threads of the same priority should be given a chance to run.
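
Several of the methods listed above (wait(), notifyAll(), join(), interrupt(), sleep()) can be seen working together in a short sketch. The class `WaitNotifyDemo` and its methods are hypothetical names chosen for the example.

```java
class WaitNotifyDemo {
    private final Object lock = new Object();
    private String message; // guarded by lock

    // Blocks until a message has been published.
    String awaitMessage() throws InterruptedException {
        synchronized (lock) {
            while (message == null) {
                lock.wait(); // releases the lock while waiting, reacquires it before returning
            }
            return message;
        }
    }

    void publish(String text) {
        synchronized (lock) {
            message = text;
            lock.notifyAll(); // waiters wake up once this synchronized block ends
        }
    }

    // Hands a message from the calling thread to a consumer thread.
    static String handOff(String text) throws InterruptedException {
        WaitNotifyDemo box = new WaitNotifyDemo();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = box.awaitMessage();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        box.publish(text);
        consumer.join(); // wait for the consumer to terminate
        return received[0];
    }

    // Returns true if interrupt() wakes a sleeping thread with InterruptedException.
    static boolean interruptWakesSleeper() throws InterruptedException {
        boolean[] interrupted = new boolean[1];
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(60_000);
            } catch (InterruptedException e) {
                interrupted[0] = true;
            }
        });
        sleeper.start();
        sleeper.interrupt();
        sleeper.join();
        return interrupted[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(handOff("hello"));
        System.out.println("sleeper interrupted: " + interruptWakesSleeper());
    }
}
```

The `while` loop around `wait()` guards against the consumer waking up before the condition it needs is actually true.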

Inter-Process Communication

- Inter-process communication (IPC) is the set of mechanisms and techniques that processes running on a computer system use to exchange data and signals and to synchronize their activities.

- IPC allows processes to communicate and coordinate with each other, enabling collaboration and interoperability within a multi-process environment.

- The IPC mechanism is usually provided by the operating system(OS).

- The choice of IPC mechanism depends on factors such as performance requirements, complexity, scalability, and the specific needs of the application or system architecture.

- Inter-process communication is crucial for building complex and scalable computer systems, enabling processes to communicate, coordinate, and collaborate effectively to achieve desired functionality and performance.

- Several approaches are used to implement IPC among processes:

Shared Memory:

- Shared memory involves the allocation of a common memory area that multiple processes can access/share concurrently.

- Processes can read from and write to this shared memory area, allowing for fast and efficient data exchange.

- However, shared memory requires careful synchronization to prevent race conditions and ensure data consistency.

Message Passing:

- Message passing involves the exchange of messages between processes through a communication channel, such as pipes, sockets, or message queues.

- Processes send messages containing data or signals to each other, enabling communication and coordination.

- Message passing is often used in distributed systems and networked environments.

Pipes:

- Pipes are a form of message-passing that allows communication between a pair of processes, typically a parent process and its child process.

- Pipes have a unidirectional flow of data, where one process writes to the pipe, and the other process reads from it.

- Pipes are commonly used for inter-process communication in shell scripts and command-line utilities.
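
The one-way, writer-to-reader flow of a pipe can be sketched in Java with `PipedOutputStream` and `PipedInputStream`. Note the assumption: these classes connect two threads inside one JVM, so this is an in-process analogue of an operating-system pipe rather than true inter-process communication. The class name `PipeDemo` is hypothetical, and `readAllBytes()` requires Java 9 or later.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class PipeDemo {
    // Sends text from a writer thread to the calling (reader) thread through a pipe.
    static String sendThroughPipe(String text) throws IOException, InterruptedException {
        PipedOutputStream writeEnd = new PipedOutputStream();
        PipedInputStream readEnd = new PipedInputStream(writeEnd);

        Thread writer = new Thread(() -> {
            // Closing the write end signals end-of-stream to the reader.
            try (OutputStream out = writeEnd) {
                out.write(text.getBytes(StandardCharsets.UTF_8));
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        });
        writer.start();

        String received;
        try (InputStream in = readEnd) {
            received = new String(in.readAllBytes(), StandardCharsets.UTF_8);
        }
        writer.join();
        return received;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sendThroughPipe("data through the pipe"));
    }
}
```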

Sockets:

- Sockets are communication endpoints that allow processes to communicate over a network or locally within the same system.

- Processes can establish socket connections to send and receive data streams, enabling communication between different machines or processes on the same machine.

- Sockets are widely used in client-server applications, web servers, and network protocols.
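
A minimal sketch of socket communication on the same machine is shown below. For brevity the server and client run as two threads of one JVM, which is an assumption of the example; in practice they would usually be separate processes. The class name `LoopbackSocketDemo` and the "echo: " reply format are made up for illustration.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

class LoopbackSocketDemo {
    // Starts a one-shot echo server on a free local port, sends one line, returns the reply.
    static String echoOnce(String line) throws IOException, InterruptedException {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket server = new ServerSocket(0, 1, loopback)) { // port 0 = any free port
            Thread serverThread = new Thread(() -> {
                try (Socket peer = server.accept();
                     BufferedReader in = new BufferedReader(
                             new InputStreamReader(peer.getInputStream()));
                     PrintWriter out = new PrintWriter(peer.getOutputStream(), true)) {
                    out.println("echo: " + in.readLine());
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            serverThread.start();

            try (Socket client = new Socket(loopback, server.getLocalPort());
                 PrintWriter out = new PrintWriter(client.getOutputStream(), true);
                 BufferedReader in = new BufferedReader(
                         new InputStreamReader(client.getInputStream()))) {
                out.println(line);
                String reply = in.readLine();
                serverThread.join();
                return reply;
            }
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(echoOnce("hello")); // prints "echo: hello"
    }
}
```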

Message Queues:

- Message queues provide a mechanism for processes to exchange messages asynchronously through a queue data structure.

- Processes can send messages to a queue and receive messages from the queue, allowing for reliable and ordered communication between processes.

- Message queues are often used for inter-process communication in distributed systems and real-time applications.
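
The queue-based exchange described above can be approximated inside a single Java program with a `BlockingQueue`. This is an in-process analogue between threads, not an operating-system message queue; the class `MessageQueueDemo` and the `STOP` end-of-stream marker are assumptions of the sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class MessageQueueDemo {
    // Hypothetical end-of-stream marker; real messages must not equal it.
    private static final String STOP = "__STOP__";

    // Sends the messages through a small queue to a consumer thread and returns what it received.
    static List<String> relay(List<String> messages) throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(2);
        List<String> received = new ArrayList<>();

        Thread consumer = new Thread(() -> {
            try {
                for (String m = queue.take(); !m.equals(STOP); m = queue.take()) {
                    received.add(m);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        for (String m : messages) {
            queue.put(m); // blocks while the queue is full
        }
        queue.put(STOP);
        consumer.join(); // after join(), the consumer's writes are visible here
        return received;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(relay(List.of("a", "b", "c"))); // prints [a, b, c]
    }
}
```

Messages arrive in the order they were sent because the queue is first-in, first-out and there is a single consumer.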

Signals:

- Signals are software interrupts used to notify processes of events or asynchronous notifications.

- Processes can send and receive signals to handle events such as process termination, user interrupts, or errors.

- Signals are commonly used for process management and error handling in Unix-like operating systems.

Remote Procedure Calls (RPC):

- RPC is a method in IPC that allows processes to call functions or procedures in remote processes as if they were local function calls.

- RPC frameworks provide a transparent mechanism for invoking remote procedures and passing parameters and return values between processes.

- RPC is commonly used in distributed computing environments and client-server architectures.

Direct Communication:

- Here, exactly one link is established between the two communicating processes, and they use it to share information.

Indirect Communication:

- Indirect communication uses a shared common mailbox.

- Each pair of processes can share multiple communication links.

- These shared links can be unidirectional or bi-directional.

Need for Inter-process Communication

Inter-process communication (IPC) is necessary for computer systems because:

- Data Sharing: IPC provides mechanisms for processes to exchange/share data efficiently and securely.

- Coordination: IPC facilitates coordination between processes by allowing them to communicate and synchronize their actions.

- Concurrency: IPC mechanisms help to manage concurrency by providing synchronization primitives for coordinating access to shared resources, which is very useful in multi-threaded or multi-process environments.

- Modularity and Scalability: IPC enables modular design and scalability by allowing processes to be distributed across multiple processors or machines. Processes can communicate over a network or through shared memory, enabling distributed computing and parallel processing.

- Fault Isolation: IPC can help isolate faults and failures within a system by encapsulating processes and their interactions. If one process fails or encounters an error, it can be isolated from other processes, preventing the failure from affecting the entire system.

- Interoperability: IPC facilitates interoperability between different software components or systems by providing standard communication interfaces and protocols.

- Real-time Communication: In real-time systems or applications, IPC enables timely and predictable communication between processes, ensuring that messages are delivered promptly and reliably.

- Distributed Computing: IPC is essential for distributed computing environments where processes run on different machines or nodes in a network.

Synchronization (Inter-Process)

- Inter-process synchronization is essential for coordinating the activities of multiple processes to ensure correct and orderly execution in a concurrent computing environment.

- Synchronization mechanisms prevent race conditions, data corruption, and other concurrency issues that may arise when multiple processes access shared resources concurrently.

- The choice of synchronization mechanism depends on factors such as the nature of the shared resources, the concurrency model, and the synchronization requirements of the application or system.

- Some common synchronization techniques used to support inter-process communication are:

Mutex (Mutual Exclusion):

- Mutexes are synchronization primitives that allow only one process to access a shared resource or critical section at a time.

- Processes acquire a mutex before accessing the shared resource and release it when they are done.

- Mutexes prevent concurrent access to the shared resource, ensuring that only one process can modify it at a time.
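
The acquire/release discipline of a mutex can be sketched in Java with `ReentrantLock`. The example coordinates threads within one process rather than separate processes, which is an assumption made to keep it self-contained; `MutexDemo` and its methods are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantLock;

class MutexDemo {
    private final ReentrantLock mutex = new ReentrantLock();
    private long balance = 0; // guarded by mutex

    void deposit(long amount) {
        mutex.lock();           // acquire before touching the shared state
        try {
            balance += amount;
        } finally {
            mutex.unlock();     // always release, even if an exception occurs
        }
    }

    long balance() {
        mutex.lock();
        try {
            return balance;
        } finally {
            mutex.unlock();
        }
    }

    // Several threads deposit 1 repeatedly; returns the final balance.
    static long depositConcurrently(int threads, int depositsPerThread)
            throws InterruptedException {
        MutexDemo account = new MutexDemo();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < depositsPerThread; j++) {
                    account.deposit(1);
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return account.balance();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(depositConcurrently(4, 1_000)); // prints 4000
    }
}
```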

Semaphore:

- Semaphores are integer variables used to control access to a shared resource by multiple processes. Semaphores have two operations: wait (P) and signal (V). A process decrements the semaphore value (P operation) before accessing the shared resource, blocking if the value is already zero, and increments it (V operation) when it is done.

- Semaphores can be used to implement various synchronization patterns, such as counting semaphores and binary semaphores.
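
A counting semaphore can be sketched with `java.util.concurrent.Semaphore`, where `acquire()` plays the role of the P operation and `release()` the V operation. The example uses threads inside one JVM as stand-ins for processes, and the names `SemaphoreDemo` and `maxConcurrent` are hypothetical.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class SemaphoreDemo {
    // Runs the workers and returns the largest number that were inside the guarded section at once.
    static int maxConcurrent(int permits, int workers) throws InterruptedException {
        Semaphore slots = new Semaphore(permits);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();

        Thread[] pool = new Thread[workers];
        for (int i = 0; i < workers; i++) {
            pool[i] = new Thread(() -> {
                try {
                    slots.acquire();                 // P: take a permit, blocking if none is left
                    try {
                        int now = inside.incrementAndGet();
                        peak.accumulateAndGet(now, Math::max);
                        Thread.sleep(10);            // simulate work on the shared resource
                    } finally {
                        inside.decrementAndGet();
                        slots.release();             // V: give the permit back
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            pool[i].start();
        }
        for (Thread t : pool) {
            t.join();
        }
        return peak.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("peak concurrency: " + maxConcurrent(2, 6));
    }
}
```

With two permits, the reported peak never exceeds 2, no matter how many workers are started.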

Condition Variables:

- Condition variables are synchronization primitives used for signaling and waiting for specific conditions to be met.

- Here, processes can wait on a condition variable until a condition is true, at which point they are awakened and can proceed.

- Condition variables are often used in conjunction with mutexes to coordinate access to shared data structures and implement producer-consumer patterns.
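
The producer-consumer pattern mentioned above can be sketched with a `ReentrantLock` and two `Condition` objects. As with the other sketches, threads stand in for processes; the `BoundedBuffer` class and its capacity of 2 are arbitrary choices for the example.

```java
import java.util.ArrayDeque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class BoundedBuffer {
    private final ArrayDeque<Integer> items = new ArrayDeque<>();
    private final int capacity;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();
    private final Condition notEmpty = lock.newCondition();

    BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    void put(int value) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) {
                notFull.await();      // wait until a consumer makes room
            }
            items.addLast(value);
            notEmpty.signal();        // tell a waiting consumer that data is available
        } finally {
            lock.unlock();
        }
    }

    int take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();     // wait until a producer adds data
            }
            int value = items.removeFirst();
            notFull.signal();         // tell a waiting producer that there is room
            return value;
        } finally {
            lock.unlock();
        }
    }

    // A producer thread sends 1..n; the caller consumes them and returns their sum.
    static int sumThroughBuffer(int n) throws InterruptedException {
        BoundedBuffer buffer = new BoundedBuffer(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) {
                    buffer.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        for (int i = 0; i < n; i++) {
            sum += buffer.take();
        }
        producer.join();
        return sum;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(sumThroughBuffer(100)); // prints 5050
    }
}
```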

Read/Write Locks:

- Read/write locks allow multiple processes to read from a shared resource concurrently while ensuring exclusive access for writing.

- Processes acquire a read lock when reading from the resource and a write lock when modifying it.

- Read locks can be held concurrently by multiple processes, while write locks are exclusive.
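
The sharing rules of a read/write lock can be probed with `ReentrantReadWriteLock`. In this sketch (thread-based, with the hypothetical class name `ReadWriteDemo`), one thread holds a read lock while a second thread checks whether it can also obtain a read lock and whether it can obtain the write lock.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

class ReadWriteDemo {
    // Returns { second reader admitted?, writer admitted? } while a read lock is held.
    static boolean[] probeWhileReading() throws InterruptedException {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        boolean[] result = new boolean[2];

        rw.readLock().lock(); // the calling thread is the first reader
        try {
            Thread probe = new Thread(() -> {
                // A second reader can share the lock with the first one.
                result[0] = rw.readLock().tryLock();
                if (result[0]) {
                    rw.readLock().unlock();
                }
                // A writer needs exclusive access, so it is refused while a reader holds the lock.
                result[1] = rw.writeLock().tryLock();
                if (result[1]) {
                    rw.writeLock().unlock();
                }
            });
            probe.start();
            probe.join();
        } finally {
            rw.readLock().unlock();
        }
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] r = probeWhileReading();
        System.out.println("second reader admitted: " + r[0]); // true
        System.out.println("writer admitted: " + r[1]);        // false
    }
}
```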

Barrier:

- A barrier is a synchronization primitive that allows processes to synchronize their execution by waiting for all processes to reach a certain point before proceeding.

- Processes wait at the barrier until all processes have arrived, at which point they are released simultaneously.

- Barriers are commonly used in parallel and distributed computing environments to synchronize computation phases.
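
A barrier between two computation phases can be sketched with `CyclicBarrier`. The example again uses threads in place of processes, and `BarrierDemo` is a hypothetical name. The barrier action runs once, when the last worker arrives, and records how many workers had finished phase one at that moment.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

class BarrierDemo {
    // Returns the number of workers that had completed phase one when the barrier opened.
    static int phaseOneCountSeenAtBarrier(int workers) throws InterruptedException {
        AtomicInteger phaseOneDone = new AtomicInteger();
        AtomicInteger seenAtBarrier = new AtomicInteger(-1);

        // The barrier action runs once, after all parties have called await().
        CyclicBarrier barrier = new CyclicBarrier(workers,
                () -> seenAtBarrier.set(phaseOneDone.get()));

        Thread[] pool = new Thread[workers];
        for (int i = 0; i < workers; i++) {
            pool[i] = new Thread(() -> {
                phaseOneDone.incrementAndGet();   // phase one work
                try {
                    barrier.await();              // wait for every other worker
                } catch (InterruptedException | BrokenBarrierException e) {
                    Thread.currentThread().interrupt();
                }
                // phase two would start here, after all workers have arrived
            });
            pool[i].start();
        }
        for (Thread t : pool) {
            t.join();
        }
        return seenAtBarrier.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(phaseOneCountSeenAtBarrier(4)); // prints 4
    }
}
```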

Message Passing:

- Message passing is a synchronization technique where processes communicate by sending and receiving messages through a communication channel.

- Message passing ensures that processes synchronize their actions by exchanging messages in a coordinated manner.

- Message passing can be implemented using various IPC mechanisms, such as pipes, sockets, message queues, and remote procedure calls (RPC).
